Google DeepMind and Google Cloud have unveiled a new tool to help identify AI-generated images, according to an Aug. 29 blog post.

Currently in beta, SynthID aims to combat the spread of misinformation by adding an invisible, permanent watermark to images to identify them as computer-generated. It is available to a limited number of Vertex AI customers using Imagen, one of Google’s text-to-image generators.

This invisible watermark is embedded directly into the pixels of an image created by Imagen and remains intact even if the image is modified, for example with added filters or color changes.

Beyond adding watermarks, SynthID takes a second approach: it can scan an image and assess the likelihood that it was created by Imagen.

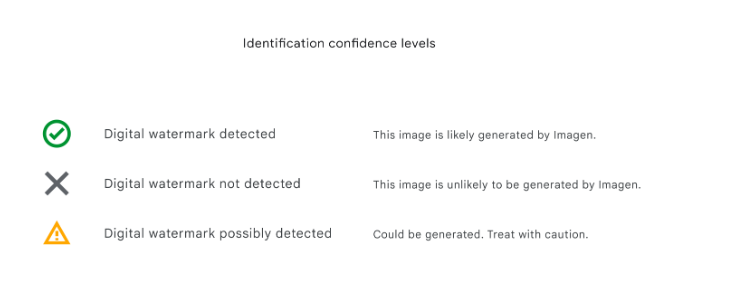

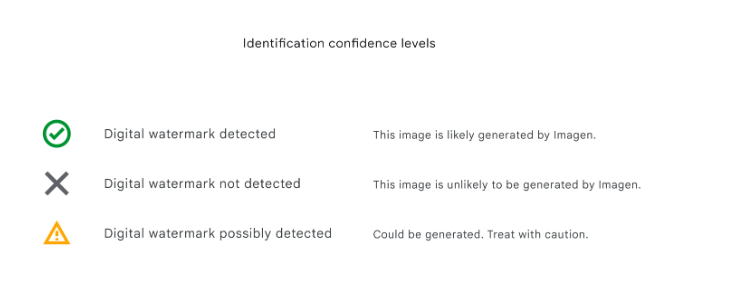

The AI tool offers three ‘confidence levels’ for interpreting the results of digital watermark identification:

- “Detected” – the image was probably generated by Imagen

- “Not Detected” – the image is unlikely to have been generated by Imagen

- “Possibly Detected” – the image could have been generated by Imagen. Treat it with caution.
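To make the three-way result concrete, here is a minimal Python sketch of how a detector's score might be bucketed into these labels. Google has not published how SynthID arrives at its result, so the score range, the two thresholds, and the names `WatermarkResult` and `classify` are all assumptions made for illustration, not part of any Google API.

```python
from enum import Enum


class WatermarkResult(Enum):
    """The three labels described in the article."""
    DETECTED = "Detected"
    POSSIBLY_DETECTED = "Possibly Detected"
    NOT_DETECTED = "Not Detected"


# Hypothetical cut-offs, chosen only for illustration.
# SynthID's real thresholds (if it uses any) are not public.
HIGH_THRESHOLD = 0.9
LOW_THRESHOLD = 0.5


def classify(score: float) -> WatermarkResult:
    """Map an assumed detector score in [0, 1] to one of the three labels."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= HIGH_THRESHOLD:
        return WatermarkResult.DETECTED
    if score >= LOW_THRESHOLD:
        return WatermarkResult.POSSIBLY_DETECTED
    return WatermarkResult.NOT_DETECTED
```

The middle band is the point of the design: rather than forcing a yes-or-no answer, an uncertain score is surfaced as “Possibly Detected” so the viewer knows to be cautious.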

In the blog post, Google acknowledged that the technology “isn’t perfect,” but said its internal testing showed the tool remains accurate against many common image manipulations.

As deepfake technology advances, tech companies are actively looking for ways to identify and flag manipulated content, especially when that content disrupts social norms and causes panic, as with the fake image of an explosion at the Pentagon.

The EU is already working to implement technology, through its Code of Practice on Disinformation, that can identify and label this type of content for users of Google, Meta, Microsoft, TikTok and other social media platforms. The Code is the first self-regulatory framework designed to motivate companies to work together on solutions to combat disinformation. When it was first introduced in 2018, 21 companies agreed to commit to it.

While Google has taken its own approach to this challenge, a consortium called the Coalition for Content Provenance and Authenticity (C2PA), backed by Adobe, is leading the way in digital watermarking efforts. Google previously introduced the “About This Image” tool to provide users with information about the provenance of images found on its platform.

SynthID is a next-generation method for identifying digital content and acts as a sort of “upgrade” to identifying a piece of content through its metadata. Because SynthID’s invisible watermark is embedded in the pixels of an image, it is compatible with metadata-based image identification methods and remains detectable even if that metadata is lost.

However, with the rapid advancement of AI technology, it remains uncertain whether technical solutions such as SynthID will be fully effective in addressing the growing challenge of disinformation.

Editor’s note: This article was written by a staff member of nft now in collaboration with OpenAI’s GPT-4.