In a world where traditional social media platforms dominate the digital conversation, are decentralized alternatives emerging as a promising counterbalance to censorship, or as a breeding ground for hate speech?

BeInCrypto spoke with Anurag Arjun, co-founder of blockchain infrastructure pioneer Avail, who is passionate about how decentralization could transform online speech and governance.

Decentralized social media faces moderation and privacy challenges

In October, X (formerly Twitter) suspended Iranian Supreme Leader Ali Khamenei’s Hebrew-language account for “violating platform rules.” The post in question commented on Israel’s retaliatory attack on Tehran, reigniting global debates about the power centralized platforms have over public discourse.

Many asked: should a country’s supreme leader really be barred from commenting on airstrikes within its own borders?

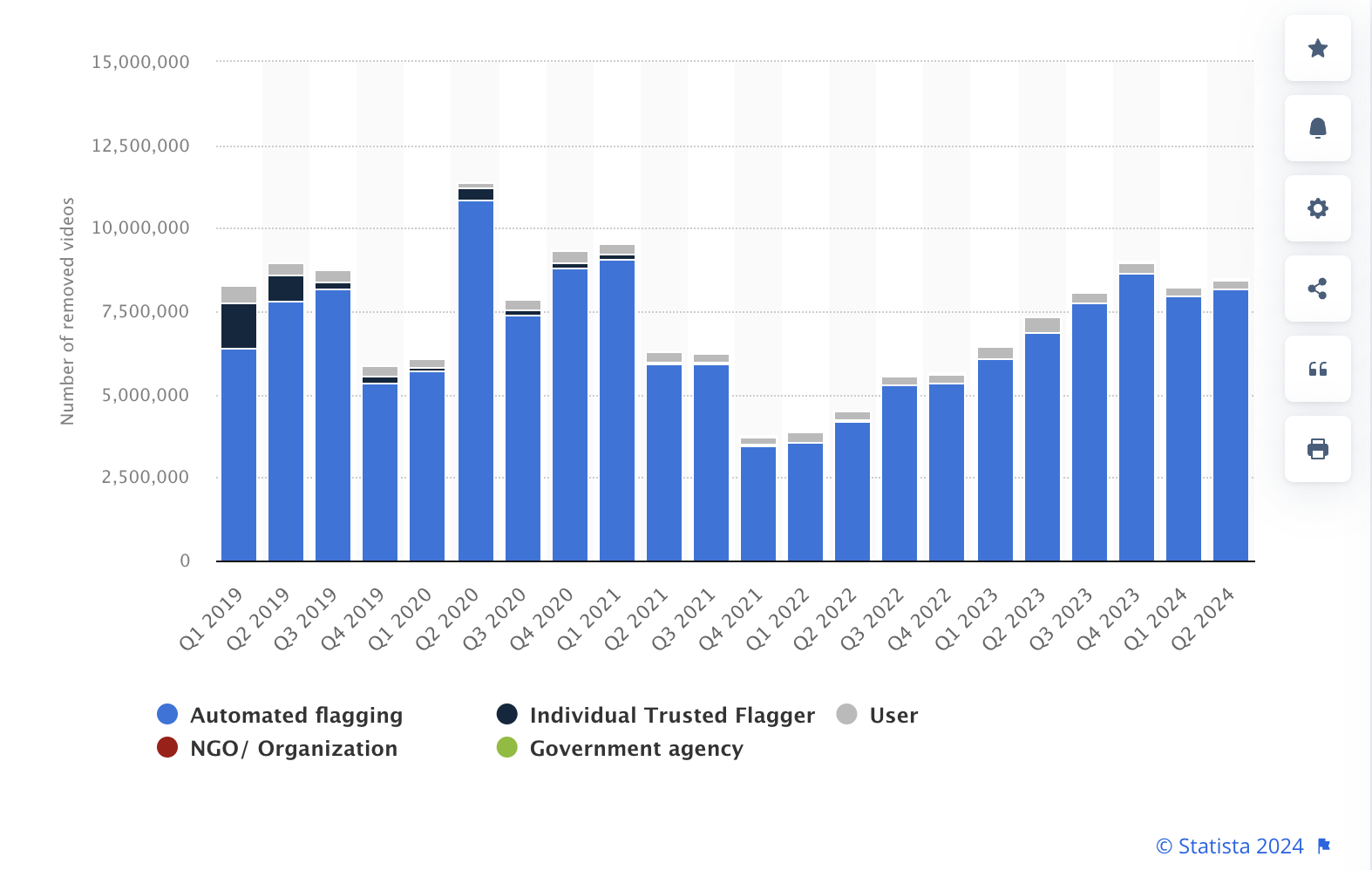

Political sensitivity aside, the same thing happens all the time to everyday creators, in contexts where the stakes are much lower. In the second quarter of 2024, YouTube removed about 8.19 million videos flagged by its automated systems, compared with only about 238,000 flagged by users.

YouTube video removals, 2019 to 2024. Source: Statista.

In response, decentralized platforms such as Mastodon and Lens Protocol are gaining popularity. Mastodon, for example, added 2.5 million active users after Elon Musk completed his acquisition of Twitter in October 2022. These platforms promise to redistribute control, but this raises complex questions about moderation, accountability and scalability.

“Decentralization does not mean the absence of moderation – it is about shifting control to user communities while maintaining transparency and accountability,” Anurag Arjun, co-founder of Avail, told BeInCrypto in an interview.

Decentralized platforms are designed to remove corporate influence over online expression, letting users define and enforce moderation standards themselves. Unlike Facebook and YouTube, which face accusations of algorithmic bias and shadow banning, decentralized systems claim to promote open dialogue.

Although decentralization removes a single point of control, it does not guarantee fairness. A recent Pew Research Center survey found that 72% of Americans believe social media companies exert too much power over public debate.

That skepticism extends to decentralized systems as well, where governance must stay transparent to keep the loudest voices from monopolizing the conversation.

“Distributed governance ensures that no individual or company unilaterally decides what can or cannot be said, but it still requires safeguards to balance different perspectives,” explains Arjun.

Community-led moderation challenges

Without centralized oversight, decentralized platforms rely on community-driven moderation. This approach aims to promote inclusivity but risks fragmentation when consensus is hard to reach. Mastodon instances often set their own moderation rules, which can confuse users and put communities at risk.

Wikipedia is a strong example of successful community-led moderation. It relies on about 280,000 active editors to maintain millions of articles worldwide. Transparent processes and collaboration between users build trust while protecting free expression.

“Transparency in governance is essential. It prevents exclusion and builds trust among users, making everyone feel represented,” says Arjun.

Decentralized platforms face the challenge of balancing freedom of expression with controlling harmful content such as hate speech, disinformation and illegal activities. A high-profile example is the controversy surrounding Pump.fun, a platform that enabled livestreams for meme coin promotions.

Misuse of the feature led to harmful broadcasts, including threats of self-harm tied to token price swings.

“This highlights a crucial point. Platforms need layered governance models and evidence verification mechanisms to tackle harmful content without becoming authoritarian,” explains Arjun.

The seemingly obvious solution is artificial intelligence. While AI tools can identify malicious content with up to 94% accuracy, they lack the nuanced judgment needed for sensitive cases. To be effective, decentralized systems must therefore pair AI with transparent, human-led moderation.

So the question remains: how can a platform protect people from harm, or enforce any rules at all, without first agreeing on what constitutes foul play? And what would a community become if it did manage to police itself organically?

Governance and new censorship risks

Decentralized governance democratizes decision-making but introduces new risks. Voting systems, while participatory, can marginalize minority opinions, replicating the very problems that decentralization seeks to eliminate.

For example, on Polymarket, a decentralized prediction market platform, majority voting has at times suppressed dissent, underscoring the need for safeguards.

“At a time when centralized control of information is a systemic risk, prediction markets provide a way to cut through misleading narratives and see the unvarnished truth. Prediction markets are freedom-preserving technology that moves societies forward,” said a blockchain researcher on X.

Transparent appeal mechanisms and checks on majority power are crucial to prevent new forms of censorship.

Decentralized platforms also prioritize user privacy, giving individuals control over their data and social graphs.

This autonomy strengthens trust, as users are less exposed to corporate mishandling of their data, such as the 2018 Cambridge Analytica scandal, in which data from up to 87 million Facebook users was improperly harvested. In 2017, 79% of Facebook users trusted the company with their privacy; after the scandal, that share dropped by 66%.

User trust in Facebook from 2011 to 2017. Source: NBC.

However, privacy can complicate efforts to address harmful behavior. Balancing the two is what lets decentralized networks remain secure without compromising their core principles.

Arjun explains: “Privacy should not come at the expense of responsibility. Platforms must implement mechanisms that protect user data while enabling fair and transparent moderation.”

Legal and regulatory issues in decentralized social media

A primary challenge for decentralized platforms is addressing legal issues such as defamation and incitement. Unlike centralized platforms such as X, which receive around 65,000 government data requests every year, decentralized platforms lack clear mechanisms for legal redress. Arjun emphasizes the importance of collaboration between platform builders and legislators.

“Involving regulators can help establish guidelines that protect users’ rights while maintaining the ethos of decentralization,” he says.

Under authoritarian regimes, decentralized platforms offer a way to resist censorship. For example, during the Mahsa Amini protests in Iran, 80 million users were affected by government-led internet shutdowns, highlighting the need for censorship-resistant networks. While decentralized platforms are harder to shut down, they are not immune to external pressure.

“Decentralization provides robust tools for resistance, but individual users remain vulnerable. Platforms must provide additional protections to protect them from prosecution. Decentralization started as a user empowerment movement. To support that vision, platforms must prioritize inclusivity, transparency and technological innovation,” concludes Arjun.

Overall, the future of decentralized social media depends on tackling these hurdles with creativity and collaboration. If successful, decentralized platforms could redefine the dynamics of online speech and provide a freer and more resilient ecosystem for expression.

The question is not whether decentralization can work, but whether it can evolve to balance freedom and responsibility in the digital age.